One of the keys to operating a secure and well-running platform is testing. Funda’s Quality Assurance Lead Jome Maria Princil shares why we said goodbye to Selenium and instead embraced Playwright to test the functionality of our user interface. She tells us how too!

“Not again”, I thought to myself as I headed over to Stephen’s desk. He had called me over, as a number of Selenium tests were failing during his deployment to production. We were using Selenium to test the functionality of our website on the UI, as end-to-end tests. After debugging the test for a while, we ended up ignoring it. The page looked ok, but the test was failing. It was a typical scenario where the test was too fast for the page.

This was way back in 2019, in the pre-COVID days. Over that year, we held a number of surgeries trying to save the Selenium tests. We added retry mechanisms, broke down a number of complex tests, and added warm-ups as a precondition. However, in the end, after a number of false alarms, where tests failed although the page itself was fine, we decided to sunset the tests.

The trouble with the Selenium tests

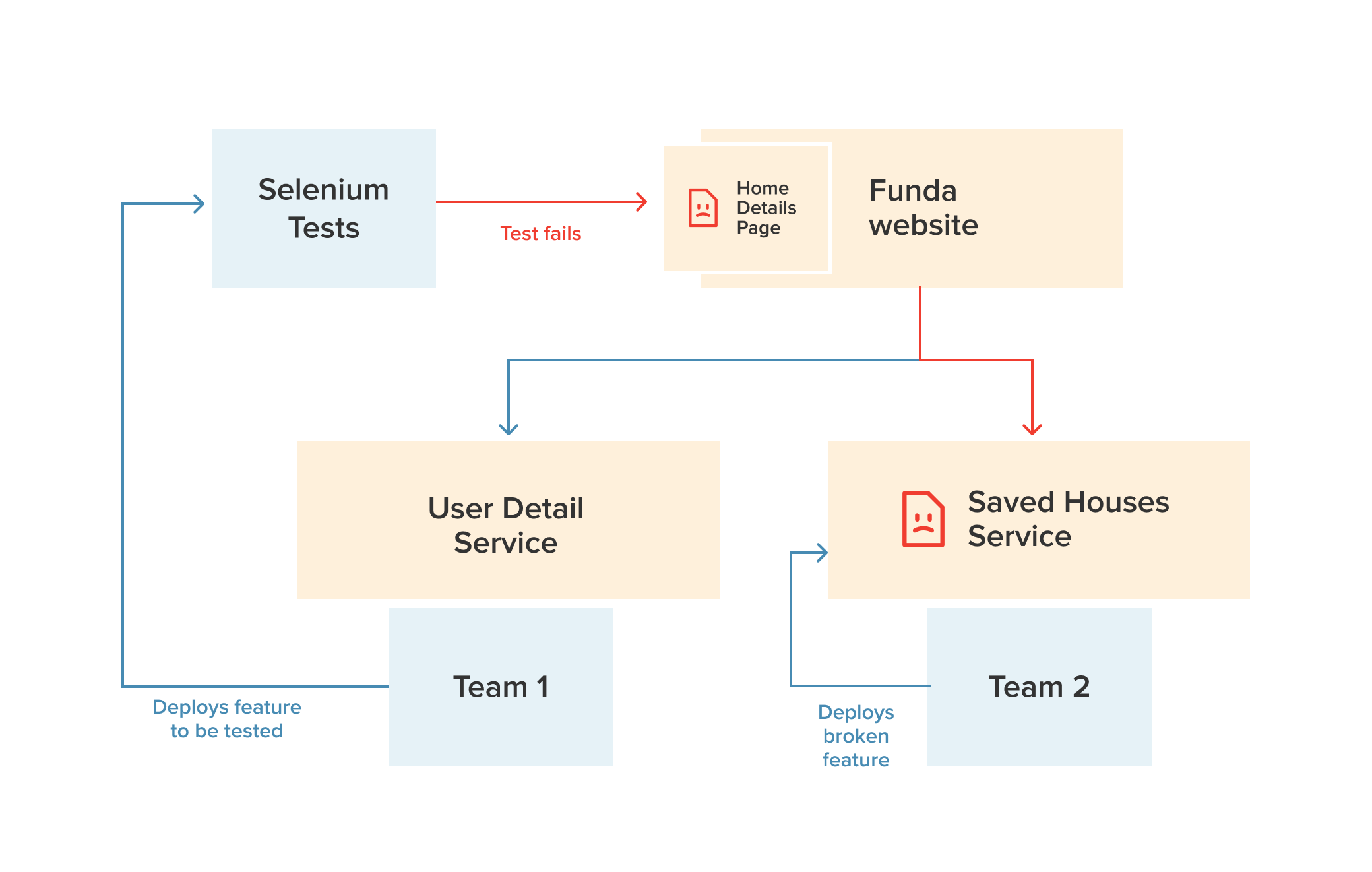

Monolith testing: We had the Selenium tests running on the deployment of the website together with AngleSharp tests. Now, the website repository is a big monolith, with many external dependencies for each page. Moreover, the tests ran on our team environments and the acceptance environment with each deployment of the website to production. This meant that if a dependent API or service was not working when the tests were running, we would get a failure. We had cases where one team was releasing the website while another team was trying out a feature on an unrelated but dependent service, so tests that were completely unrelated to what was being deployed on the website were failing, as shown in figure 1 above. Granted, this often resulted in interesting conversations between teams, but it also led to the perception that the tests were more of a hindrance than a help.

No easy integrated way of mocking external calls: Now, it could be easy to assume that we could have gone through our Selenium tests and mocked the calls to external systems. Unfortunately, Selenium did not, at that moment, have the ability to easily mock external calls. They do mention it on their encouraged to-do guide but provide no integrated tool for this purpose. We could have tried to mock calls with tools like WireMock. However, that would have required more effort from the team, and the effort we would have put in would not have paid off, especially with our ambition to move to a microservice architecture.

Tests didn’t really wait for the page to be loaded: In the Selenium tests, we wrote code to wait for the page to be loaded before performing any actions on the page. A scenario we would encounter very often was that the test would be notified that the page was loaded and all elements were available, and it would proceed while there were still calls happening in the backend. This led to tests failing, as they expected an element to be present, but the element was not yet there. We went from using explicit waits to check if an element was ready, to adding retry mechanisms using tools like Polly and finally adding page warm-ups and health checks. However, it felt like an over-engineered solution to a simple problem.
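To make the layering concrete, here is a minimal sketch of the retry pattern described above. It is written in TypeScript purely for illustration and is not Funda's code (the article names Polly, a .NET library). `SlowPage`, `findElement`, and `retry` are hypothetical names, and the delay between attempts that a real retry policy would add is left out to keep the sketch short.

```typescript
// Simulated page: the element only becomes available on the third lookup,
// standing in for backend calls that finish after the page reports "loaded".
class SlowPage {
  private lookups = 0;

  findElement(selector: string): string {
    this.lookups += 1;
    if (this.lookups < 3) {
      throw new Error(`Element not found: ${selector}`);
    }
    return selector;
  }

  get lookupCount(): number {
    return this.lookups;
  }
}

// Generic retry wrapper: re-runs the action until it succeeds
// or the attempts run out, then rethrows the last error.
function retry<T>(action: () => T, maxAttempts: number): T {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return action();
    } catch (error) {
      lastError = error;
    }
  }
  throw lastError;
}

const slowPage = new SlowPage();
const found = retry(() => slowPage.findElement('#search-button'), 5);
console.log(found, slowPage.lookupCount);
```

Every interaction in a test needs this kind of wrapping once the page can report "loaded" too early, which is why the approach ends up feeling heavier than the problem it solves.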

Testing in a microservice architecture

Funda decided to move to a microservice architecture for the benefits it brings. With this decision, we also had to rethink the way we did our testing. Continuing to test at an integrated, end-to-end level would lead to more issues whenever dependent systems were not working. We would end up with a distributed monolith, where systems were technically separated but releases still depended on everything working together, giving us none of the advantages of splitting into microservices. With the new architecture, we would split backend and frontend systems, enabling us to test the UI at the scope of the frontend, and add backend and API tests for the backend systems. This would simplify and stabilize our tests.

Why Playwright?

So, we decided to try out a new tool to do our UI testing for the front-end systems in the features we broke out from the website monolith. After considering several options, we ended up choosing Playwright, thanks to the advantages it offered, as discussed below.

Tests run on build: With Playwright, we host our application under test in a locally running webserver and test against it. This eliminates the need to deploy to an environment, as we can now run the tests at build time. This fits our ambition to test first, faster. If there is a problem in the feature we are developing, we should know about it as early as possible.
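As an illustration of testing against a locally hosted application, the configuration below is a sketch that assumes Playwright's Node.js test runner (`@playwright/test`). The article does not say which Playwright binding, start command, or port Funda uses, so `npm run start`, `./tests`, and `http://localhost:3000` are placeholders.

```typescript
// playwright.config.ts
import { defineConfig } from '@playwright/test';

export default defineConfig({
  testDir: './tests',
  webServer: {
    // Hypothetical start command and port; replace with your app's own.
    command: 'npm run start',
    url: 'http://localhost:3000',
    // Reuse an already running dev server locally, start a fresh one on CI.
    reuseExistingServer: !process.env.CI,
  },
  use: {
    // Lets tests call page.goto('/') instead of repeating the full URL.
    baseURL: 'http://localhost:3000',
  },
});
```

With a setup like this, the test runner starts the application, waits until the URL responds, runs the tests, and shuts the server down again, so no deployed environment is involved.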

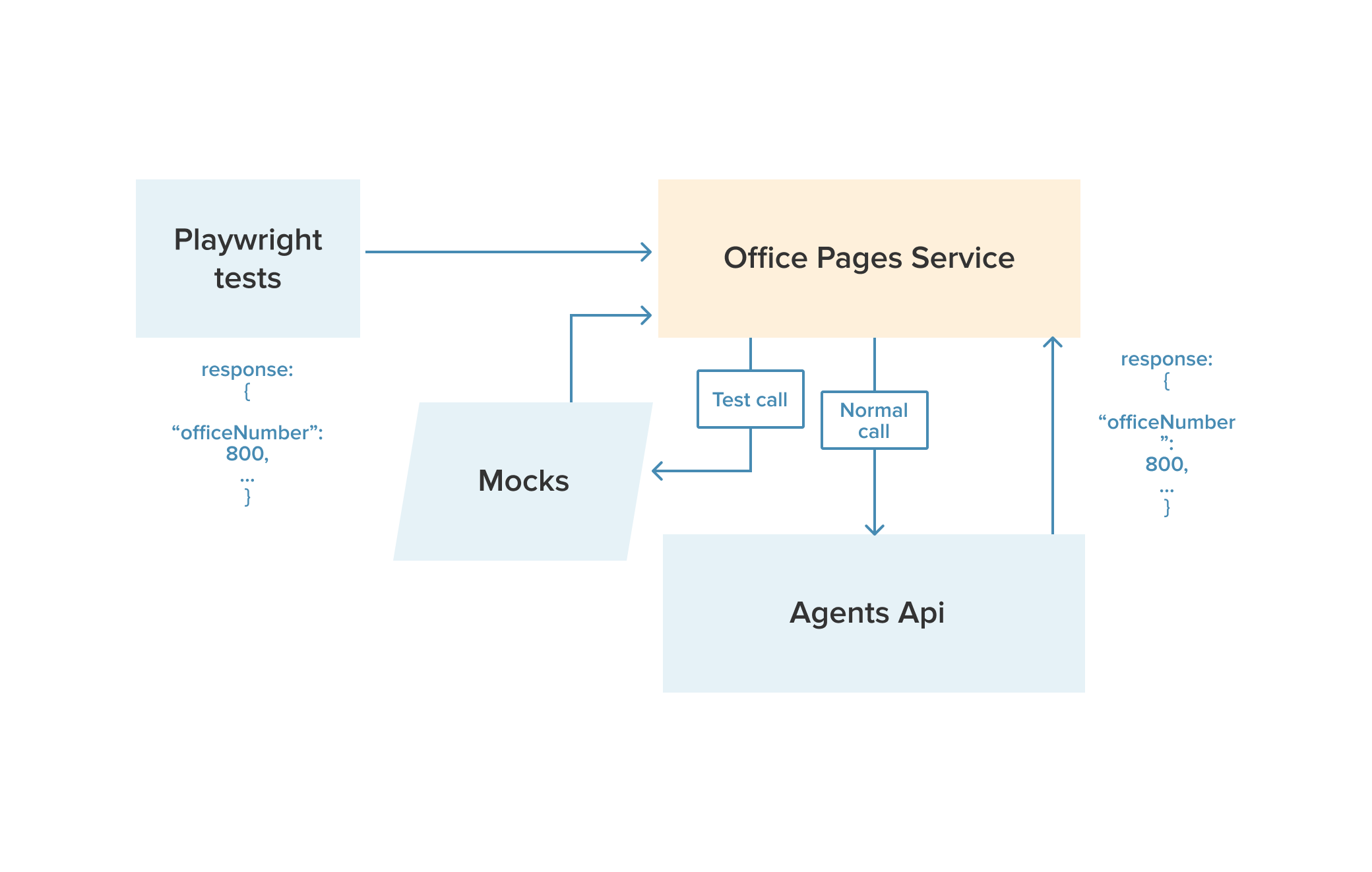

Easy to mock external calls: Playwright offers an integrated way to mock external calls – see figure 2 above. This makes the tests more robust and less dependent on external systems. So, even if an external system is not working, the tests will not fail. True, this makes the Playwright tests less of an end-to-end test and more of a ‘Frontend component test’, but for the purpose of testing the functionality within the application/service, it suffices.
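A minimal sketch of what such a mock can look like, in TypeScript. The endpoint pattern and payload are made up for illustration. So that the sketch compiles on its own, `MockablePage` and `MockableRoute` are hypothetical stand-ins that mirror only the parts of Playwright's `page.route` and `route.fulfill` used here; in a real test you would pass Playwright's own `page`.

```typescript
// Minimal stand-ins for the parts of Playwright's Page and Route used here.
interface FulfillOptions {
  status: number;
  contentType: string;
  body: string;
}

interface MockableRoute {
  fulfill(options: FulfillOptions): Promise<void>;
}

interface MockablePage {
  route(
    urlPattern: string,
    handler: (route: MockableRoute) => Promise<void>,
  ): Promise<void>;
}

// Hypothetical endpoint and payload, for illustration only.
const LISTINGS_API_PATTERN = '**/api/listings*';
const FAKE_LISTINGS = [
  { id: 1, city: 'Amsterdam', price: 450000 },
  { id: 2, city: 'Utrecht', price: 380000 },
];

// Registers a canned response so the frontend never calls the real service.
async function mockListingsApi(page: MockablePage): Promise<void> {
  await page.route(LISTINGS_API_PATTERN, async (route) => {
    await route.fulfill({
      status: 200,
      contentType: 'application/json',
      body: JSON.stringify(FAKE_LISTINGS),
    });
  });
}
```

In a Playwright test, `await mockListingsApi(page)` would be called before `await page.goto('/')`, so that the route is registered before the frontend requests its data.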

Autowaits: The Playwright tests are more stable because Playwright waits for an element to become truly ready before it performs an action on that element. This avoids code having to be written to check for the visibility of the element or to retry the action in case the element is not yet ready.

Developers embrace the tests: With the Selenium tests, developers did not run the tests locally while developing. This was because the tests first had to be set up to point to an environment, and running them took a long time. With the Playwright tests, developers can run the tests (albeit with a different command) just like the unit tests. We now see developers running the Playwright tests, fixing them, and extending them.

How we phased out the Selenium tests

Part of our QA goals was the desire to proceed fast and well. This meant we wanted to ensure that quality checks did not have to come at the cost of longer lead times. With this in mind, we concluded that the Selenium end-to-end tests did not help us move faster. We saw over the course of the year that 30–40% of the Selenium tests failed due to flakiness, so we decided to phase out these tests.

We did this with the steps below:

1. We ran a month-long experiment disabling the Selenium tests on the acceptance environment, leaving only the static AngleSharp tests running. We left the Selenium tests running on team environments. We monitored how many test failures we had on team environments and how many bugs slipped into production.

The results of our experiment were as follows:

- The Selenium tests did catch some bugs (4 separate failures) in the team environments while the experiment was running.

- In half of the cases, the AngleSharp tests also caught the failure.

- There were no failures in production due to the Selenium tests being disabled on acceptance.

2. With the positive results of the experiment, we decided to investigate if we could move some of our Selenium tests to lower levels. We analyzed our most important tests and added JavaScript unit tests that would mitigate the risk. We also extended our .NET unit tests in some cases. In cases where an end-to-end test seemed valuable, we added AngleSharp JavaScript tests instead of the Selenium test.

3. We got rid of our Selenium suite on the website repository, leaving only the AngleSharp tests. As we moved functionality from the website repository to separate systems, we added Playwright tests in the new repositories.

The situation now

We, as QA engineers, find ourselves being called to resolve a failing test on the website less often than before. In the rare event this happens, it is often due to missing test data on the environment, or sometimes because something is really broken. Of course, the remaining AngleSharp tests are susceptible to dependent services being down as well, but this is now the exception more than the rule.

With the Playwright tests, we find ourselves pairing with the developers to extend the tests and speaking the same language as they do, leading to a whole-team approach to testing. With the move to be less dependent on end-to-end UI tests, we see people doing more exploratory testing, without the mentality of “If something’s broken, the Selenium tests will catch it”.

Overall, the move from Selenium to Playwright has been a good one!